Introduction

Announcements of a new mobile network technology generation (5G) have triggered a series of alarming claims about related health threats. This is nothing new: the phenomenon has been with us since the 1990s. The same alarming claims were made around the launch of UMTS (3G) in the year 2000 and again with the start of LTE in 2010. This time the reaction is considerably stronger than in the past, mainly because social networks tend to spread alleged “bad news” and alarming stories practically at light speed. Public opinion is first and foremost directed against cell phone towers, as they are the visible landmarks of the technology. Each time a tower for a cellular network is built or planned in a city or near a rural settlement, a new discussion starts about the health effects of mobile phone or network radiation. It is about time to put things back into perspective. In this post I’d like to deal with the reality of mobile network radiation.

My first statement here is: The major exposure of humans to radiation from mobile radio technology comes from handheld phones, not from base stations!

The reason is very simple. The power of electromagnetic radiation drops extremely fast with distance from the transmitter. See the post https://www.grandmetric.com/blog/2018/02/20/explained-pathloss/ by Mateusz Buczkowski in this blog for a general introduction to the concept of path loss.

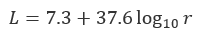

For a frequency of 1 GHz (typical range for mobile phone networks) the path loss measured in decibels (dB) is

$$L(r) = 7.3 + 37.6 \cdot \log_{10}(r)$$

where r is the distance from the source to the measurement point in meters. This formula goes back to the Japanese scientists Okumura and Hata, who carried out extensive series of measurements and compiled them into empirical formulas. The Okumura-Hata formulas are internationally accepted and part of the mobile phone standards and acceptance rules. There are variants for different environments (city, rural) and frequencies, but they all show the same pattern. In very simple terms the formula says: the radiation power goes down almost with the 4th power of the distance (the factor 37.6 corresponds to an exponent of 3.76).
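To get a feeling for how quickly the signal fades, here is a minimal Python sketch of the path loss relation. It assumes the coefficients 7.3 and 37.6 that follow from the worked examples in this post (44.9 dB at 10 m, 82.5 dB at 100 m). The function name `path_loss_db` is just an illustrative choice.

```python
import math


def path_loss_db(distance_m: float) -> float:
    """Empirical path loss in dB at about 1 GHz for a distance in meters.

    Assumed form: 7.3 + 37.6 * log10(r), taken from the worked examples.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 7.3 + 37.6 * math.log10(distance_m)


for r in (10, 100, 1000):
    print(f"{r:>5} m: {path_loss_db(r):6.1f} dB")

# Doubling the distance adds a fixed number of dB, whatever the starting point.
extra_db = path_loss_db(200) - path_loss_db(100)
factor = 10 ** (extra_db / 10)
print(f"Doubling the distance: +{extra_db:.1f} dB, power drops by a factor of {factor:.1f}")
```

A pure 4th-power law would give a factor of 16 for every doubling of the distance. The exponent of 3.76 lands slightly below that, which is what "almost" the 4th power means here.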

Tower/base station perspective

Let us try it out: Antenna transmission power is anywhere between 250 mW (24 dBm) for a Small Cell and 120 W (roughly 50 dBm) for the largest 5G MIMO arrays. A typical 2G, 3G, or 4G antenna has a transmission power of 20 W (43 dBm).
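If you are not used to dBm values, the conversion is easy to check yourself. The following sketch converts between watts and dBm (decibels relative to one milliwatt). The helper names are my own choice for illustration.

```python
import math


def watts_to_dbm(power_w: float) -> float:
    """Convert a power in watts to dBm (dB relative to 1 mW)."""
    return 10 * math.log10(power_w * 1000)


def dbm_to_watts(power_dbm: float) -> float:
    """Convert a power in dBm back to watts."""
    return 10 ** (power_dbm / 10) / 1000


transmitters = [
    ("Small Cell", 0.25),
    ("Typical 2G/3G/4G antenna", 20.0),
    ("Largest 5G MIMO array", 120.0),
]
for label, watts in transmitters:
    print(f"{label:<26} {watts:7.2f} W = {watts_to_dbm(watts):5.1f} dBm")
```

Strictly speaking, 120 W is a little closer to 51 dBm than to 50 dBm. For the order-of-magnitude estimates in this post, the rounded value makes no practical difference.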

Let us quickly apply that to a user standing at a relatively small distance from the transmitter:

A Small Cell is comparable to a WLAN access point, and you can come pretty close. We assume a distance of 10 m and get a path loss of 7.3 + 37.6 = 44.9 dB. Subtracting the path loss from the transmission power gives 24 dBm – 45 dB = -21 dBm, which corresponds to approximately 8 µW (a µW is one millionth of a watt).

A 5G macro cell antenna will be placed up on a tower or on the roof of a high building. Height above ground is thus some 30 m, and we assume a position at 100 m distance from the antenna. The path loss works out to 7.3 + 2 × 37.6 = 82.5 dB. The received power is 50 dBm – 82.5 dB ≈ -32 dBm, which is less than one µW.
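The two estimates above can be reproduced in a few lines. This is a rough sketch under the same simplifications as in the text: the path loss coefficients 7.3 and 37.6 are assumed from the worked examples, and antenna height and directivity are ignored. All function names are illustrative.

```python
import math


def path_loss_db(distance_m: float) -> float:
    """Assumed empirical path loss at about 1 GHz, distance in meters."""
    return 7.3 + 37.6 * math.log10(distance_m)


def received_dbm(tx_dbm: float, distance_m: float) -> float:
    """Received power: transmission power minus path loss."""
    return tx_dbm - path_loss_db(distance_m)


def dbm_to_microwatts(power_dbm: float) -> float:
    """Convert dBm to microwatts (1 mW = 1000 µW)."""
    return 10 ** (power_dbm / 10) * 1000


small_cell = received_dbm(tx_dbm=24, distance_m=10)
macro_cell = received_dbm(tx_dbm=50, distance_m=100)

for label, rx in (("Small Cell at 10 m", small_cell), ("5G macro cell at 100 m", macro_cell)):
    print(f"{label:<24} {rx:6.1f} dBm = {dbm_to_microwatts(rx):5.2f} µW")
```

Although the macro cell transmits several hundred times more power than the Small Cell, the larger distance more than eats up the difference.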

A light bulb has a power consumption of about 60 W, and the emitted light and heat will be in that range. At home, your distance to a light bulb will be 2-3 meters, so the impact of the light bulb on your body will be more than a million times higher. It is the general consensus in medical and biological research that the only impact of microwave radiation, such as that used in mobile networks, is the heating of the target object.

Amendment

This post made a reference to the above. The author used some of my figures to create a true “horror” case of mobile radiation, in which radiation in the kilowatt range would hit humans. With this amendment I want to show why his construction is a misconception. The blogger basically relies on his own understanding of the term “antenna gain”. He claims that I omitted antenna gain from my high-level calculations, and that antenna gain would turn my innocent-looking figures into a real power monster.

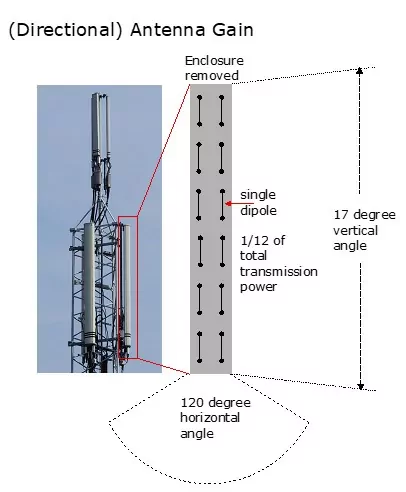

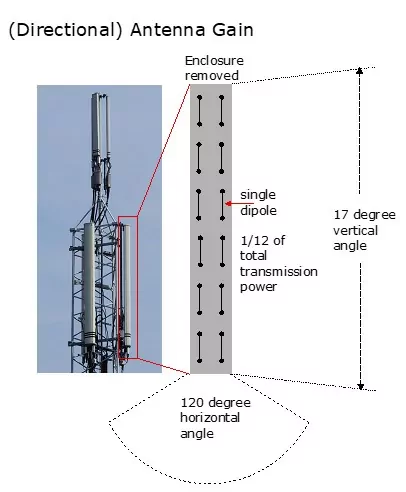

What is antenna gain? The term sounds like a hidden amplifier, which is a complete misconception. “Directional gain” would be a much better fit and should be used in the technical literature instead. Antennas are passive, with no electrical power supply of their own. They just receive the radio frequency signal from the transmission circuitry and convert it into electromagnetic waves. Since it doesn’t make much sense to radiate in all directions of the sphere (including upwards and downwards), antennas are constructed to focus the radiation into a solid angle, typically 120 degrees wide and 15 to 20 degrees high. For all who have seen the video, I would like to add the basic construction of such antennas (see figure):

On the left-hand side you see the enclosures mounted on the pole, and next to them a schematic drawing showing the internals. The enclosure is what people see when they look at a cell tower. Inside you see 12 vertical beams in a 2×6 arrangement, the dipoles. These dipoles are the radiating elements. Each dipole gets only a fraction of the total transmission power supplied to the antenna. This is where the video goes wrong. Its author assumes that each dipole gets the full 20 watts, and with “thousands of dipoles” he arrives at his key message. The total power supplied to the antenna and radiated by it does not change through this arrangement, though. Wave interference has the effect that the wavefronts generated by the dipoles add up or cancel out depending on the direction. The energy is focused into the angle shown in the drawing. The 5G “Massive MIMO” technology just uses more dipoles (such as 64 in an 8×8 configuration, or 128, instead of just 2×6) and feeds them with dynamically delayed signals, so that the “beam” can move and sweep an area. And never ever are “thousands of dipoles” used in antenna construction. There is no digital signal processor available today that could do the MIMO mathematics (which is complex matrix multiplication) for that many elements simultaneously and in real time.
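The interference argument can be illustrated with a small idealized model. The sketch below is my own simplification, not a description of a real antenna: one row of in-phase point sources spaced half a wavelength apart, sharing a fixed total power, with the intensity given in relative units. The function name and parameters are hypothetical.

```python
import cmath
import math


def array_intensity(n_elements, total_power_w, angle_deg, spacing_wavelengths=0.5):
    """Relative far-field intensity of an idealized row of in-phase elements.

    angle_deg is measured from the main transmission direction.
    """
    # The supplied power is shared: each element gets total_power_w / n_elements.
    amplitude = math.sqrt(total_power_w / n_elements)
    # Phase difference between neighbouring elements as seen from this angle.
    phase_step = 2 * math.pi * spacing_wavelengths * math.sin(math.radians(angle_deg))
    field = sum(amplitude * cmath.exp(1j * phase_step * n) for n in range(n_elements))
    return abs(field) ** 2


TOTAL_POWER_W = 20.0
N_DIPOLES = 6  # one column of the 2x6 arrangement

single = array_intensity(1, TOTAL_POWER_W, 0.0)
main_beam = array_intensity(N_DIPOLES, TOTAL_POWER_W, 0.0)
# For half-wavelength spacing the first cancellation occurs where sin(angle) = 2 / N.
null_angle_deg = math.degrees(math.asin(2 / N_DIPOLES))
first_null = array_intensity(N_DIPOLES, TOTAL_POWER_W, null_angle_deg)

print(f"Main direction: {main_beam / single:.1f} times the single-element intensity")
print(f"At {null_angle_deg:.1f} degrees off axis: {first_null:.2e} (waves cancel out)")
```

With the same 20 W shared among six elements, the intensity in the main direction rises by a factor of six, while in other directions the waves cancel out. Nothing is amplified; the power is only redistributed.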

Antenna gain is the result of this focusing of the radiation: the antenna in the picture has an antenna gain of 15 dB. This just tells you that in the main transmission direction there is about 30 times more power than there would be if the antenna radiated equally in all directions. The total power radiated by the antenna remains unchanged.

By the way: the total transmission power is limited by legal and regulatory requirements. And the regulatory authorities in all countries that I know of add up the total radiation level from a tower rather than considering just a single antenna.

Mobile Phone Perspective

Let us have a look at phone radiation, then. The phone next to your head transmits at a maximum of about 200 mW (which is 23 dBm). That is at least 10,000 times more than the signal received from the tower. Typical transmission power values of phones are a lot lower, though. The base station at the tower controls the power of the phone. It sets the phone transmission power to a level at which all phone signals are received at approximately the same strength. If you are near a tower, your phone will transmit at a minimum level (which is below one milliwatt). Only if the reception from the tower is very bad will your phone be commanded to increase its transmission power. It may sound crazy, but: more mobile base stations mean lower overall radiation levels.
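The factor of 10,000 is easy to verify with the dBm figures from the tower section (about -21 dBm for the Small Cell, about -32 dBm for the macro cell). A minimal sketch, with variable names chosen only for illustration:

```python
PHONE_MAX_DBM = 23  # maximum phone transmission power, about 200 mW

# Received tower signal levels estimated earlier in this post.
received_dbm = {
    "Small Cell at 10 m": -21,
    "5G macro cell at 100 m": -32,
}

ratios = {}
for label, rx_dbm in received_dbm.items():
    difference_db = PHONE_MAX_DBM - rx_dbm
    # A difference in dB translates into a power ratio of 10^(dB / 10).
    ratios[label] = 10 ** (difference_db / 10)
    print(f"{label:<24} {difference_db} dB, a factor of about {ratios[label]:,.0f}")
```

Keep in mind that this compares the phone at its maximum power with the tower signal at the chosen distances. Near a tower the phone is commanded down to a much lower level, so the real-life gap is usually smaller.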

The power of phone transmission has gone down since the first generations of mobile communication. In GSM, phones were allowed to transmit at up to 1.0 W (sometimes even 2 W). You may remember that 20 years ago the typical heavy user was holding the phone against the head and making voice calls all the time. With today’s smartphones the typical user hardly makes a phone call any more, and instead holds the phone about 1 m away from the face for screen interaction.

The impact of phone radiation has gone down dramatically since the early 2000s. If there were any health effect from mobile phone radiation, we should have started to see it by now. Over the past 20 years, millions of users were exposed to higher radiation than today. For example, the increase in cancer rates that some people have been predicting since the year 2000 has simply not occurred. None of the studies that are regularly cited by the alarmist news have ever passed scientific quality reviews. They have been rejected on the basis of selection bias, too small sample sizes, and many other reasons. The WHO and national health administrations give a very critical review of these studies. If you are interested in more details about those aspects, see the following post.